Deep Learning 2 - Introducing Activation Functions for Neural Networks

Before learning about neural networks, it helps to understand the activation function, which converts the weighted sum of a neuron's inputs into its output. Below is another form of the perceptron equation from Deep Learning 1.

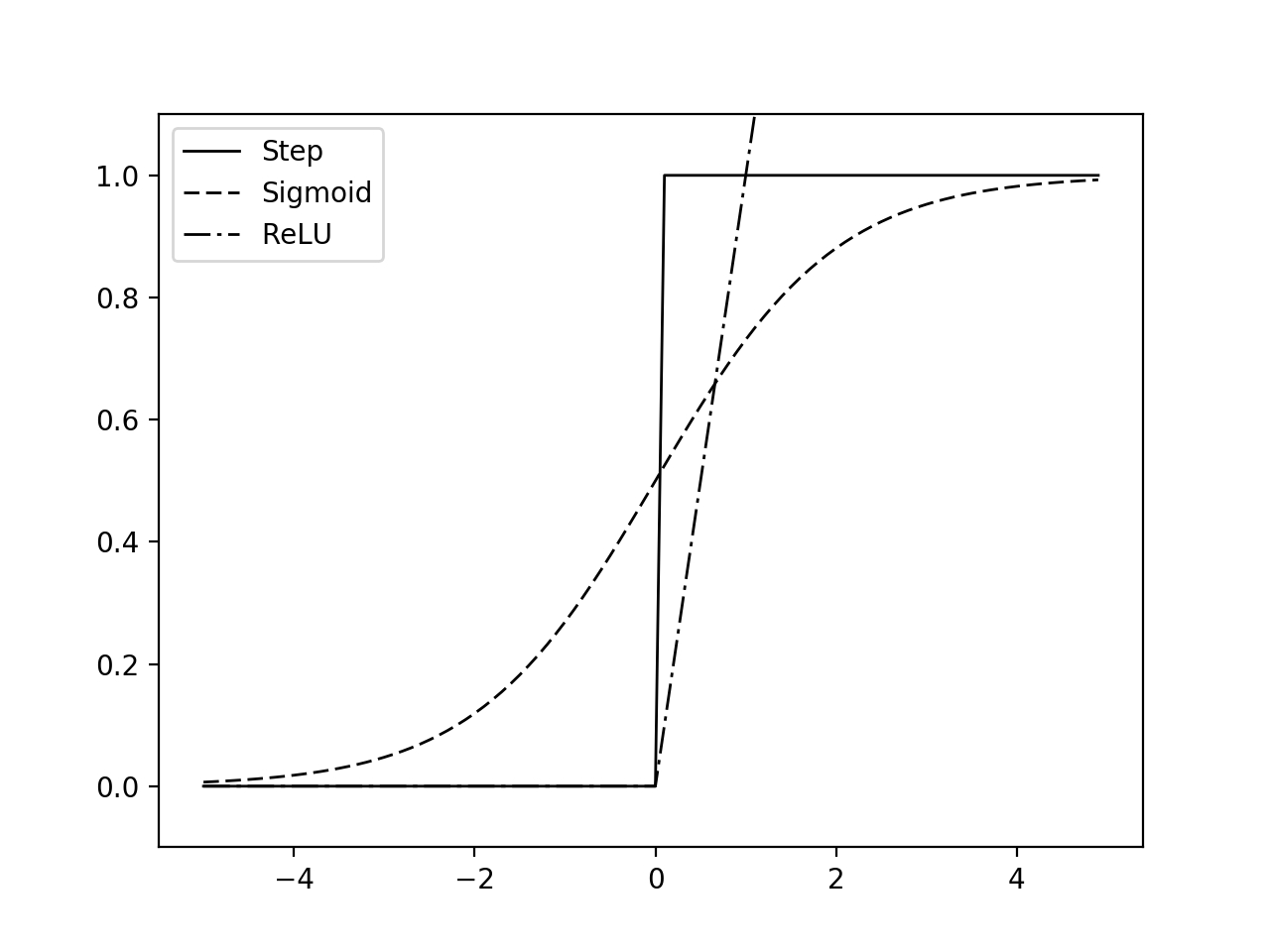

Here $h(x)$ is the step function, the simplest activation function. Several other functions are commonly used in deep learning.

Sigmoid Function

\begin{equation} h(x) = \frac{1}{1+\exp(-x)} \end{equation}
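As a quick check of the formula above, here is a minimal sketch that evaluates the sigmoid at a few points using only the standard library. The sample points are arbitrary choices for illustration. It shows that the output always stays strictly between 0 and 1, and that h(0) is exactly 0.5.

```python
import math


def sigmoid(x):
    """Sigmoid h(x) = 1 / (1 + exp(-x)) for a single float."""
    return 1.0 / (1.0 + math.exp(-x))


# Arbitrary sample points, chosen only for illustration
for x in (-5.0, -1.0, 0.0, 1.0, 5.0):
    print(f"h({x:+.1f}) = {sigmoid(x):.4f}")
```

The curve is also symmetric about the point (0, 0.5): h(-x) = 1 - h(x) for any x.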

Rectified Linear Unit (ReLU)

\begin{equation} h(x) = \max(0, x) \end{equation}

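All three are non-linear functions, which is essential for a neural network: a composition of linear functions is itself linear, so stacking layers with a linear activation adds no expressive power. This figure shows the three functions.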
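One way to see why non-linearity matters is to compare a linear activation with ReLU in a toy setting. The sketch below is illustrative only: the `linear` activation, its constant `c`, and the helpers `stacked_linear` and `tiny_net` are hypothetical names, not part of the original sample code. It uses plain Python floats rather than NumPy arrays.

```python
def linear(x, c=2.0):
    """Hypothetical linear activation h(x) = c * x."""
    return c * x


def relu(x):
    """ReLU for a single float."""
    return max(0.0, x)


def stacked_linear(x, c=2.0):
    """Three 'layers' that each apply only the linear activation."""
    return linear(linear(linear(x, c), c), c)


def tiny_net(x, activation):
    """Two hidden units with weights +1 and -1; their outputs are summed."""
    return activation(1.0 * x) + activation(-1.0 * x)


xs = [-2.0, -1.0, 0.0, 1.0, 2.0]
print([stacked_linear(x) for x in xs])    # same values as 8 * x
print([tiny_net(x, linear) for x in xs])  # the two units cancel out
print([tiny_net(x, relu) for x in xs])    # matches abs(x)
```

With the linear activation, three stacked layers reduce to a single multiplication by c**3, and the two-unit network always outputs zero. With ReLU, the same two-unit network computes the absolute value, a shape that no linear function can produce.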

You can plot them with the following code.

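import numpy as np
import matplotlib.pyplot as plt


def step(x):
    # 1 where x > 0, otherwise 0 (np.int was removed in NumPy 1.24; use int)
    return np.array(x > 0, dtype=int)


def sigmoid(x):
    # Smooth S-shaped curve with values in (0, 1)
    return 1 / (1 + np.exp(-x))


def relu(x):
    # Element-wise max(0, x)
    return np.maximum(0, x)


x = np.arange(-5.0, 5.0, 0.1)
y_step = step(x)
y_sigmoid = sigmoid(x)
y_relu = relu(x)

# One line style per function so the black curves stay distinguishable
plt.plot(x, y_step, label='Step', color='k', lw=1, linestyle='-')
plt.plot(x, y_sigmoid, label='Sigmoid', color='k', lw=1, linestyle='--')
plt.plot(x, y_relu, label='ReLU', color='k', lw=1, linestyle='-.')
plt.ylim(-0.1, 1.1)  # ReLU values above 1.1 are cut off by this limit
plt.legend()
plt.show()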

The sample code is here.